Everything in Noema is now free forever—update to 1.4 for unlimited search and performance boosts.

Make small local models smarter by pairing them with the data you choose.

Upload your PDFs, enable tools, and get answers based on your specific content. Works offline. Stays private.

Privacy-First AI for iPhone

Noema is a privacy-first AI companion built for iPhone, giving students and power users powerful tools without subscriptions or constant internet access.

By downloading lightweight local models and pairing them with curated datasets from the Open Textbook Library, Noema bridges the gap between limited model size and high-quality knowledge, enabling accurate, context-rich answers.

Built-in Viewer

Supports PDFs, EPUBs, Markdown, and JSON, making it easy to study and reference material directly in the app.

Power User Features

Explore GGUF, MLX, and Leap models, fine-tune settings, and enable tool-calling for privacy-preserving web lookups through our self-hosted SearxNG relay—all while staying secure in off-grid mode.

Whether as a local AI tutor or a customizable AI workspace, Noema puts control and knowledge directly in your hands.

Retrieval-Augmented Generation

Advanced AI with your own data, completely offline

In-App Dataset Library

Direct integration with Open Textbook Library — browse and import complete resources directly inside the app.

Bring Your Own Data

Import personal documents, textbooks, research papers in TXT, PDF, or EPUB formats for complete offline access.

Local Embedding & Indexing

Convert datasets into efficient numerical representations stored in a compact on-device vector database.
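To illustrate the idea of embedding and indexing, here is a minimal sketch in Python. It is not Noema's implementation: the hashed bag-of-words `embed` function stands in for a real embedding model, and the names `DIM`, `chunk_text`, `embed`, and `build_index` are hypothetical, as is the in-memory list used in place of a vector database.

```python
import math
import zlib

DIM = 64  # hypothetical vector size; real embedding models produce learned vectors


def chunk_text(text, max_words=40):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: hash each word into a bucket, then L2-normalize the counts."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in hash()
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(documents):
    """Return (chunk, vector) pairs: a stand-in for an on-device vector database."""
    index = []
    for doc in documents:
        for chunk in chunk_text(doc):
            index.append((chunk, embed(chunk)))
    return index
```

Each chunk is stored next to its vector, so a later query only needs to embed the question and compare it against the stored vectors.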

Smart Context Injection

Retrieve the most relevant dataset chunks and inject them into prompts for grounded, accurate responses.
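The retrieve-and-inject step can be sketched as follows. This is an illustrative example under stated assumptions, not Noema's code: it scores chunks with cosine similarity over plain word counts instead of learned embeddings, and `cosine`, `top_chunks`, `build_prompt`, and the prompt wording are all hypothetical.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two word-count Counters."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_chunks(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = Counter(question.lower().split())
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(c.lower().split())), reverse=True)
    return ranked[:k]


def build_prompt(question, chunks, k=2):
    """Inject the retrieved chunks into the prompt ahead of the question."""
    context = "\n".join(f"- {c}" for c in top_chunks(question, chunks, k))
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Only the selected chunks enter the prompt, so the context sent to the model stays small no matter how large the dataset is.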

Low-RAM, High-Knowledge Advantage

Noema's RAG system revolutionizes LLM use on low-memory devices by shifting knowledge storage into compact datasets rather than bloated model weights.

Always Available

Once a dataset is imported, it works anytime, anywhere — no connectivity needed. Your knowledge travels with you.

Triple Backend Support

A first for mobile LLM applications: Noema's triple backend support lets you run GGUF, MLX, and Leap models, opening up a large and diverse model library.

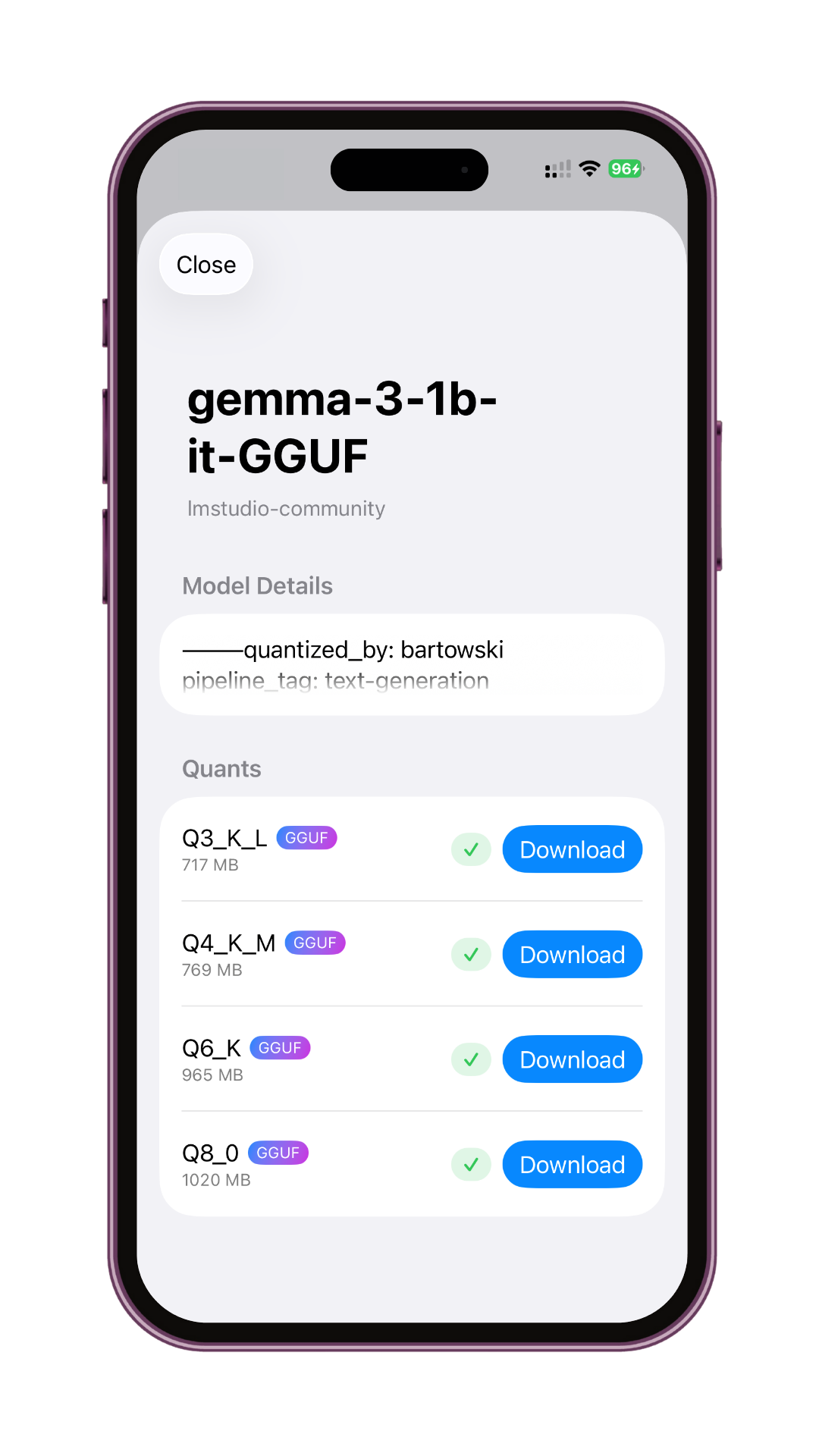

GGUF Format Support

Run powerful open-source language models locally with full support for the GGUF format: optimized for fast inference, low memory usage, and complete offline access. Whether you're on desktop or mobile, GGUF enables high-performance AI with customizable quantization and broad compatibility across platforms and backends.
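As a rough illustration of why quantization matters on a phone, the sketch below estimates the memory needed for model weights alone, using bytes ≈ parameters × bits per weight ÷ 8. The 3-billion-parameter model is a hypothetical example, and the estimate ignores file metadata, mixed quantization levels, and runtime memory such as the KV cache, so real GGUF files will differ.

```python
def weight_bytes(n_params, bits_per_weight):
    """Approximate bytes needed to hold the weights alone."""
    return n_params * bits_per_weight / 8


def to_gib(n_bytes):
    """Convert bytes to GiB (1 GiB = 1024**3 bytes)."""
    return n_bytes / 1024**3


if __name__ == "__main__":
    n_params = 3_000_000_000  # hypothetical 3B-parameter model
    for bits in (16, 8, 4):
        size = to_gib(weight_bytes(n_params, bits))
        print(f"{bits:>2} bits/weight: {size:.2f} GiB")
```

Under these assumptions, dropping from 16 to 4 bits per weight cuts the weight footprint to a quarter, which is the kind of saving that makes a model fit in a phone's memory.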

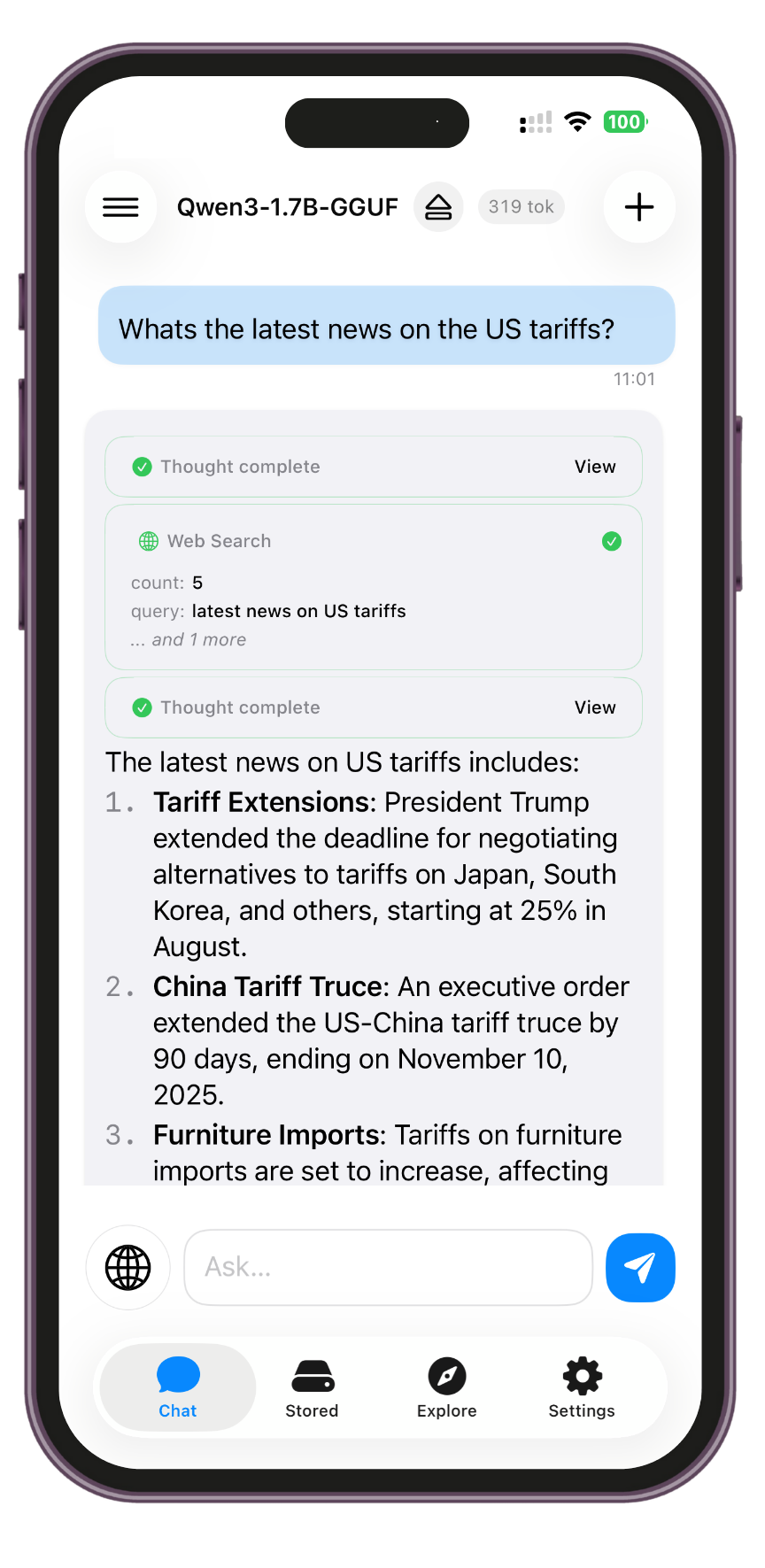

See Noema in action

Experience the intuitive interface that makes running local AI models effortless

Effortless Model Management

Browse, download, and organize your AI models with our intuitive interface. Integrated Hugging Face search makes discovering new models a breeze.

- One-click model installation

- Automatic dependency management

- Real-time download progress

Intelligent Conversations

Experience seamless AI conversations with advanced context understanding and tool calling capabilities.

- Advanced context awareness

- Built-in tool integration

- Dataset RAG integration

Customizable AI Experience

Adjust advanced settings and personalization options to get the best performance from your AI models.

- Custom model parameters

- Performance optimization

- Privacy-first approach

Model Context Protocol Integration

Search is included today, with partial MCP integration coming soon. Enhanced context awareness and tool integration will make your AI conversations more intelligent and productive.

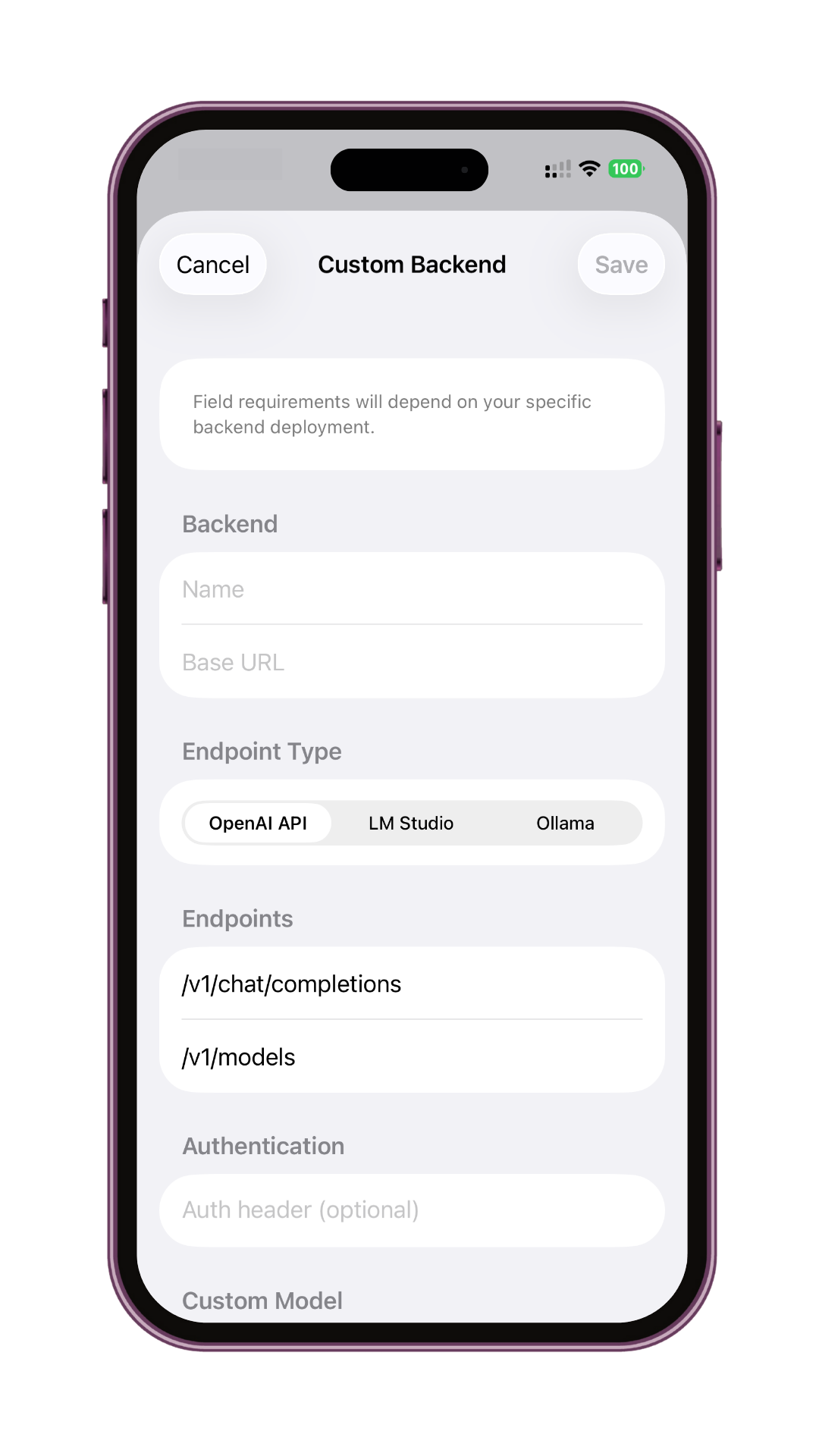

Bring remote inference into your local workspace

Noema now bridges the gap between machines. Register HTTP-accessible backends to chat with the models you run on another desktop, rack server, or cloud VM without leaving your trusted Noema environment.

Connect from any device

Register remote desktops or servers and talk to the models they host through Noema's unified chat interface.

Provider-aware defaults

Dedicated profiles for OpenAI API, LM Studio, and Ollama endpoints surface provider metadata like quantization, architecture, and tooling support.

Safety checks built-in

Connection summaries and status chips keep you informed, while Off-Grid mode pauses network traffic when you need to stay offline.

Just HTTP

Point Noema at any standards-compliant inference endpoint using custom paths, headers, and stop sequences tailored to your deployment.
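For a sense of what such a request looks like, here is a minimal sketch that builds (but does not send) a chat request for an OpenAI-compatible endpoint. It is an illustration, not Noema's networking code: `build_chat_request` is a hypothetical helper, and the host, model name, and token in the usage note are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, path, model, user_message, headers=None, stop=None):
    """Build a POST request with a custom path, extra headers, and stop sequences."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    if stop:
        payload["stop"] = stop  # sequences at which the server should stop generating

    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})  # e.g. an Authorization header

    return urllib.request.Request(
        url=base_url.rstrip("/") + "/" + path.lstrip("/"),
        data=json.dumps(payload).encode("utf-8"),
        headers=all_headers,
        method="POST",
    )
```

A call such as `build_chat_request("http://192.168.1.20:1234", "/v1/chat/completions", "my-model", "Hello", headers={"Authorization": "Bearer TOKEN"}, stop=["</s>"])` returns a request object that `urllib.request.urlopen` could send. Keeping construction separate from sending makes it easy to inspect exactly what would leave the device.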

Guided setup for remote models

Choose a backend type to pre-fill paths, validate required fields, and capture the exact authorization header that each request should use.


Powerful Features for AI Excellence

Experience the future of AI interaction with our comprehensive suite of tools and capabilities

Model Context Protocol (MCP)

For now, a search tool is included, with partial support for custom MCP servers. MCP is a tool-calling protocol that lets your AI model search autonomously and perform agentic actions.

Advanced Dataset Integration

Access Open Textbook Library resources through RAG without increasing context usage or requiring model finetuning. Retrieve relevant information on-demand while keeping your model lightweight and efficient.

RAG (Retrieval Augmented Generation)

Upload your own documents and query them with RAG when the material is too large to fit in the model's context window. Your personal knowledge base returns contextually relevant answers without hitting context limits.

Built-in Tool Calling

Execute functions and interact with external tools seamlessly, expanding your AI's capabilities with real-world actions and integrations.
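In general terms, tool calling means the model emits a structured request and the app runs the matching function. The sketch below shows one simple way to dispatch such a call. It assumes the model outputs JSON with `name` and `arguments` fields; that format, the `TOOLS` registry, and the `word_count` tool are hypothetical and are not taken from Noema.

```python
import json

TOOLS = {}  # hypothetical registry mapping tool names to Python functions


def tool(fn):
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def word_count(text):
    """Example tool: count the words in a string."""
    return len(text.split())


def run_tool_call(raw_call):
    """Parse a JSON tool call, run the matching tool, and return a JSON result."""
    call = json.loads(raw_call)
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return json.dumps({"error": f"unknown tool: {call.get('name')}"})
    return json.dumps({"result": fn(**call.get("arguments", {}))})
```

The JSON result is what would be passed back to the model so it can continue the conversation. Unknown tool names produce an error object instead of raising, so a malformed call does not crash the chat.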

GPU Acceleration

Harness the full power of your hardware with optimized GPU support for lightning-fast inference.

Privacy First

Your conversations and data never leave your device. Complete offline functionality guaranteed.

Seamless Chat Experience

Intuitive interface inspired by the best chat applications, designed for productivity.

Easy Model Installation

Download and set up models effortlessly with our streamlined installation process.

Smart Model Discovery

Find the perfect model for your needs with intelligent search and recommendations.

Free Forever, For Everyone

Noema is committed to democratizing AI. That's why our entire experience is now completely free—unlimited web search, remote endpoints, FlashAttention, and every other feature come standard for everyone.

Ready to transform your AI experience?

Start grounding AI models in your own data. Experience advanced tool integration and personalized intelligence, right on your iOS device.